HPCC Guides

Training documentation for Linux-based high performance compute clusters

A Brief History of HPC

High performance computing (HPC), also known as supercomputing, usually refers to a specific class of systems. It has its roots in the 1960s with the Atlas system, designed by the University of Manchester, Ferranti, and Plessey, and with a line of computers built by Seymour Cray at the Control Data Corporation.

Up until the 1980s, supercomputers used only a few processors and could perform, at their peak, only about 160 million operations per second. By today's standards this is slow, but at the time they were a modern miracle, and there was nothing better for processing data.

The Cray 1, released in 1976, quickly became one of the most successful supercomputers in history. Operating at 80 MHz, the system combined vector processing with performance-boosting vector and scalar registers, which allowed results to be reused without expensive memory references.

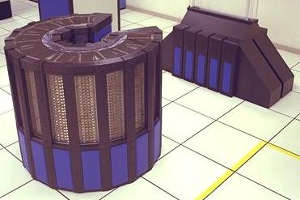

In 1985, the Cray 2 was released. A liquid-cooled system with four processors, the new Cray could operate at 1.9 gigaflops (1.9 billion floating point operations per second). The Cray 2 was the first supercomputer to run "mainstream" software because of its operating system, UNICOS, which was derived from UNIX System V.

Supercomputing was not a technology that everyone could access. We often think of HPC components as expensive today, but looking back, the costs were prohibitive for anyone beyond the big national labs, large universities, and well-funded private companies. Adjusted for inflation, the Cray 1 could be yours for 46 million dollars, and the Cray 2, which looked like something out of Flash Gordon, could be purchased for 32 million (adjusted). Imagine: tens of millions of dollars for computational power equivalent to an early-generation iPad.

By the late 1990s, getting into supercomputing was still a sizable investment, so academic researchers began to piece together commodity components to solve computationally intensive problems without the expense of a Cray or an IBM system. These were pseudo-supercomputers for the financially challenged. These ‘clusters’ became increasingly complex. Faster networking fabrics, faster processors, and more memory pushed the boundaries of what was possible, and clusters grew into the petaflop giants that regularly appear in the top 10 of the TOP500 list of supercomputers.

The Titan supercomputer, shown here, can perform 27 quadrillion operations per second, and as of 2014 the Tianhe-2 is the fastest system, boasting 33 quadrillion operations per second. These systems are still expensive, but the cost per operation has gone down considerably. Many universities are home to smaller versions of these giants, and researchers are doing some fantastic things with them.